Buy it, use it, break it, fix it, trash it, change it, mail, upgrade it

Hello hello. Running 2/2 for weekly updates with this one.

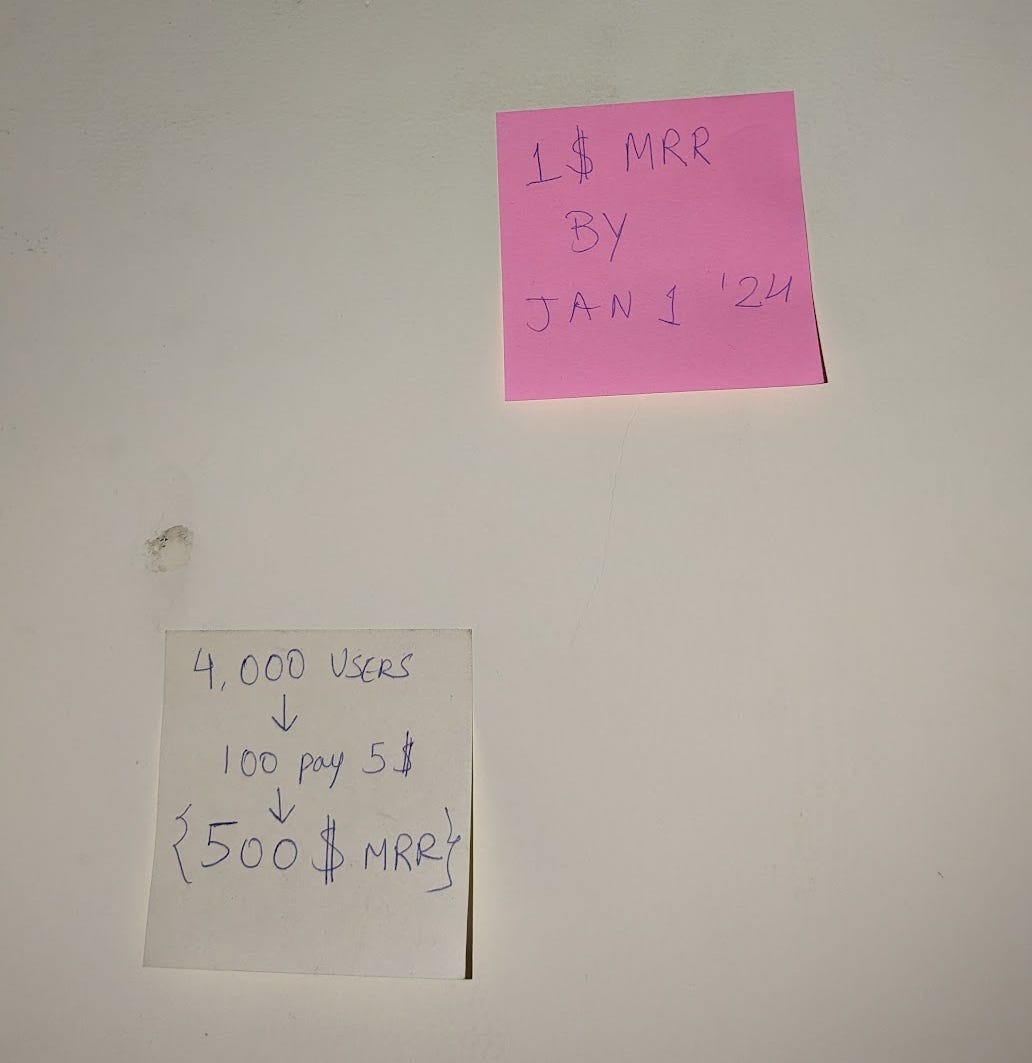

I have a timeline for myself. It’s the one in pink.

That leaves me with 57 days as of writing this. This means I gotta build out the import feature, add a payment integration, fix+improve the landing page, start launching, add caching, and work on the database (idek what exactly to do here, but ik I have to fix something).

Yeahhhh, the to-do list is a bit long. Speaking of which, I started using Linear to “manage” my “project”. I hope to stick to it for at least this launch so that everything is in one place.

I had a pretty good chat with Tom over at plzsend.help about taking help from others, my timeline up till January, and how to start planning for things after that. Turned out to be quite helpful actually. So much happens in a day nowadays, I’m considering journalling some basic pointers every day. I lose track so easily. Is this my Diary of a Wimpy Kid era?? (a JOURNAL not a DIARY)

Frontend Updates I’m Excited To Share Because It’s New To Me:

Okay so, ChatGPT Plus is 100% worth the money. I drew something on paper and asked it to generate a Next.js component for me.

And this is what I ended up with. I may be slowly becoming a shittier coder because writing this from scratch would have been freaking annoying. I’m hella glad for this though. I still had a long way to go.

Next, I had to read the bookmarks through the extension + the chrome.bookmarks API. I had to parse the bookmark tree and save it in state along with some more info, like each bookmark's checked state.
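Here's a minimal sketch of what that parsing step could look like. It's not my exact code: `BookmarkNode` just mirrors the fields of `chrome.bookmarks.BookmarkTreeNode` that matter here, and `SelectableNode`, `toSelectable`, `setChecked`, and `checkedUrls` are names made up for this example.

```typescript
// Mirrors the fields of chrome.bookmarks.BookmarkTreeNode used in this sketch.
interface BookmarkNode {
  id: string;
  title: string;
  url?: string; // folders have no url
  children?: BookmarkNode[];
}

// Hypothetical state shape: the same tree plus a `checked` flag on every node.
interface SelectableNode {
  id: string;
  title: string;
  url?: string;
  checked: boolean;
  children: SelectableNode[];
}

// Copy the bookmark tree into the state shape, everything checked by default.
function toSelectable(nodes: BookmarkNode[], checked = true): SelectableNode[] {
  return nodes.map((node) => ({
    id: node.id,
    title: node.title,
    url: node.url,
    checked,
    children: toSelectable(node.children ?? [], checked),
  }));
}

// Return a new tree where node `id` and all of its descendants get `checked`.
// Nothing is mutated, so the result can go straight into React state.
function setChecked(
  nodes: SelectableNode[],
  id: string,
  checked: boolean,
  insideTarget = false,
): SelectableNode[] {
  return nodes.map((node) => {
    const hit = insideTarget || node.id === id;
    return {
      ...node,
      checked: hit ? checked : node.checked,
      children: setChecked(node.children, id, checked, hit),
    };
  });
}

// Collect the URLs of every checked bookmark (folders are skipped).
function checkedUrls(nodes: SelectableNode[]): string[] {
  const urls: string[] = [];
  for (const node of nodes) {
    if (node.checked && node.url) urls.push(node.url);
    urls.push(...checkedUrls(node.children));
  }
  return urls;
}
```

In the extension, the input would be whatever `chrome.bookmarks.getTree()` hands back; unchecking a folder then becomes `setChecked(tree, folderId, false)`, and `checkedUrls(tree)` is the list that gets sent for import.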

Once that was done I just rendered the tree as below. Shadcn components are really helpful. Styling it to look even this usable was much harder than I thought.

I also started working on the pipeline to download the bookmarks users would send in through this. Scraping websites is an uphill battle to get right: out of 1332 of my bookmarks, 195 websites (roughly 15%) either outright don’t load or soft-block the scraper.

The battle for machine readability of the internet.

I started by using Playwright to load up these pages and injecting my own JS to extract the page content. But I saw that ads were coming through too, way too often. After a little bit of research, I stumbled upon Readability, the library that powers Reader View in Firefox.
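For the curious, here's a rough sketch of the Readability step, assuming the `@mozilla/readability` and `jsdom` npm packages. `extractArticle` is a made-up helper name, and I'm assuming the HTML comes from something like Playwright's `page.content()` after the page has loaded. Treat it as an illustration, not my actual pipeline.

```typescript
import { JSDOM } from "jsdom";
import { Readability } from "@mozilla/readability";

// Hypothetical helper: takes already-rendered HTML and returns the main
// article title and text, or null if Readability can't find an article.
function extractArticle(
  html: string,
  url: string,
): { title: string; text: string } | null {
  // Passing `url` lets jsdom resolve relative links against the original page.
  const dom = new JSDOM(html, { url });

  // Readability modifies the document it is given, so it gets its own
  // throwaway jsdom document here.
  const article = new Readability(dom.window.document).parse();
  if (!article) return null;

  return {
    title: article.title ?? "",
    text: (article.textContent ?? "").trim(),
  };
}
```

The nice part of this setup is that Playwright only has to load the page; figuring out what counts as the actual content is Readability's job instead of my own injected JS.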

There’s a cool article that explains how it works over here: How does Firefox's Reader View work? and a cool tool to convert websites into text over here: Text-Mode.

While reading a bit more about reading mode, I also stumbled over Web 3.0 (and no, I didn’t just find out about NFTs). Wish it had worked out though :/

Anyhoo, coming back to my scraping problems: those 195 websites are still giving me trouble. I’m gonna work on getting that number as close to 0 as I can.

I could also look into maybe caching some websites that are saved by people for performance gainzz. But that’s an optimization problem for the future me.
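If future me ever gets to it, a first pass could be as simple as the sketch below: scrape each URL once and reuse the result when someone else saves the same page. Everything here is an assumption for illustration. `cacheKey` and `PageCache` are made-up names, and treating URLs that differ only by `#fragment` or `utm_*` params as the same page is my guess at a reasonable rule, not something I've tested on real bookmarks.

```typescript
// Hypothetical helper: reduce a URL to a cache key by dropping the #fragment
// and utm_* tracking parameters, then sorting whatever query params remain.
function cacheKey(raw: string): string {
  const url = new URL(raw);
  url.hash = "";
  for (const name of Array.from(url.searchParams.keys())) {
    if (name.toLowerCase().startsWith("utm_")) url.searchParams.delete(name);
  }
  url.searchParams.sort();
  return url.toString();
}

// Minimal in-memory cache. `load` is whatever does the scraping; if it returns
// a Promise, the Promise itself is cached, so parallel requests share one scrape.
class PageCache<T> {
  private entries = new Map<string, T>();

  getOrLoad(rawUrl: string, load: (url: string) => T): T {
    const key = cacheKey(rawUrl);
    if (this.entries.has(key)) return this.entries.get(key) as T;
    const value = load(key);
    this.entries.set(key, value);
    return value;
  }
}
```

An in-memory `Map` obviously won't survive a restart, so a real version would need some persistent store and an expiry rule. That part is still future me's problem.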

n&w s5 + music for da week.

I applied to buildspace n&w s5. If you’re not sure what that is, their website does a pretty good job of explaining it.

This is what I’m listening to as I write this. Apparently, Southern Nights by Glen Campbell is actually a cover of the 6th track off of this album.

Well, that’s it for this week!

glhf.